Semi-Annual Interim Performance Report

Grant Number HK-250616-16

The Jubilees Palimpsest Project:

Spectral RTI Technology for the Recovery of Erased Manuscripts from Antiquity

Todd R. Hanneken, Ph.D.

St. Mary’s University

September 30, 2017

The second semi-annual project period saw the completion of the work proposed for the first project year, and more. The following three paragraphs describe progress within the semi-annual project period in the areas of data processing, SpectralRTI technology, and dissemination, respectively. The sections after them merge accomplishments from the second semi-annual period into those of the first, forming a cumulative report that will eventually become the Final Performance Report.

Data processing progressed steadily, centered around weekly conference calls dedicated to data processing. Todd Hanneken (St. Mary's University) facilitated the discussion, summarized progress in updates to the entire project team, and provided feedback from the perspective of philology and humanities scholarship. Keith Knox (Early Manuscripts Electronic Library, EMEL) first completed batch processing of all pages using "recipes" proven on other palimpsests, namely Pseudocolor and Sharpie, except for C73inf for which Ruby was more effective. Subsequently, Knox focused efforts on developing software to correct for registration errors introduced by some of the filters used in capture. Roger Easton (Rochester Institute of Technology, RIT) and a student summer intern, Nicole Polglaze, focused on advanced supervised processing using ENVI software. This advanced processing was completed for some pages of C73inf, four of the five pages of F130sup, all seven pages of H190inf, and all ten pages of O39sup. Twenty pages of S36sup and remaining pages of C73inf are underway in the third semi-annual project period. The calls also included Ken Boydston (MegaVision), Michael Phelps (EMEL), and Josephine Dru (EMEL), all of whom contributed insight from their areas of expertise in capture hardware, object handling, and philology. In addition to the notes from the calls, Hanneken kept archival "Processing Guides" which consolidated notes from the image scientists and scholars (http://palimpsest.stmarytx.edu/AmbrosianaArchive/Guides/). Since the data is freely accessible with a Creative Commons license, it may be hoped that these guides will be of use to independent imaging specialists who may wish to apply their own processing techniques (see dissemination, below). 
Hanneken also performed processing using the SpectralRTI_Toolkit, which offered some alternative color enhancements (Extended Spectrum and PCA Pseudocolor), and added RTI interactivity to the images created by the scientists. All images were published with the International Image Interoperability Framework (IIIF) Image and Presentation APIs on the image repository (http://jubilees.stmarytx.edu). The number of IIIF Image API images (including color enhancements and raking light) is now 4363. The number of WebRTI images is now 1467. The number of IIIF Presentation manifests is eight (C73inf Latin Moses, C73inf Latin Commentary on Luke, A79inf Petrarch, F130sup Greek Commentary on Luke, H190inf unidentified undertext, O39sup Origen's Hexapla, S36sup Gothic Bible, Tests from Startup Phase).

Development of SpectralRTI technology and the SpectralRTI_Toolkit for ImageJ progressed in the three areas of documentation, training, and software development. Hanneken wrote and published an initial release of documentation for SpectralRTI and the SpectralRTI_Toolkit (http://jubilees.stmarytx.edu/spectralrtiguide/). The documentation is also published on GitHub should anyone wish to derive their own versions or contribute improvements to the documentation. As of the first release (July 2017), the documentation is quite complete in content but in need of images and other polish to be more user friendly. In addition to the published documentation, Hanneken successfully trained early adopters from two independent teams on the use of the Toolkit, Kathryn Piquette (University College London) and Sarah Baribeau (Lazarus Project and EMEL). Meanwhile, significant progress has been made on the rewrite of the Toolkit from an ImageJ macro to an ImageJ2 Java plugin. Although the plugin is not yet ready for release due to relatively peripheral bugs, the basic functionality and expected performance improvements (especially memory management) are on track. The coding work has been contracted to the Center for Digital Humanities at Saint Louis University, where Bryan Haberberger is the lead Java developer. Their development fork is available on GitHub. Saint Louis University reports 350 hours of work on software development, and that they are not concerned about doing more than the budgeted work because of their shared interest in the success of the product.

Dissemination of project activities progressed along several avenues. Hanneken has been posting project milestones, particularly publication of images, on Twitter (twitter.com/thanneken). Google seems to reach the widest audience so far, and several scholars have made contact with the project director with various inquiries. Hanneken participates in the monthly IIIF Manuscript Group conference calls, on which a wide range of innovations relevant to the project are discussed. In June, Hanneken presented at the first annual conference of Rochester Cultural Heritage Imaging, Visualization, and Education (R-CHIVE.com). Surrounding the two-day public conference were five additional days of meetings. One significant contribution of the project that became apparent at the meeting is the openness of the data. Rochester's emphasis on imaging science goes back to the glory days of Kodak and Xerox. Today, many students are working on digital image processing of cultural heritage, but lack complete data sets with which to work. Because the data is made available online without any impediment (such as registration or email request) and generously licensed, many imaging specialists will be served by and hopefully serve in turn the humanities interest of the project. This and other connections made at the conference and workshops can be expected to have long-term direct and indirect benefits.

One practical "lesson learned" worth reporting is that Amazon Simple Storage Service (S3) proved not to be effective for storing data for the IIIF image repository. This low-cost service requires transfer of a complete file to the main processing instance before any of it can be read. The key benefit of the Jpeg2000 backend of the IIIF Image server is that only the tiles with the region and detail required need be read and processed. The solution was to move the data from Amazon S3 to Amazon Elastic File System (EFS). This service is more expensive, but savings in other project areas should suffice to cover the additional cost.

Accomplishments for the first period surpassed goals, partly because the project director had a full-year sabbatical that gave extra flexibility in time. The proposal outlined goals by years, rather than half-years. The first-year goals remaining for the second semi-annual period lie mostly in publishing documentation for general use and rewriting the software from ImageJ Macro Language to Java. The former is on target and the latter is under contract with the Saint Louis University Center for Digital Humanities.

The activities below match those described in the proposal, with the addition of designing and building an arc to automate Spectral RTI captures. The breadth of audiences served surpassed expectations. We believe this is because the news of receiving a grant from the NEH raised awareness of the project even before we had something to show for the work. There were no personnel deletions and some volunteer additions.

The overall budget appears to be on track, with greater expenses in data management equipment (due to anticipated expansion in scope) and lesser expenses for food in Milan (due to favorable currency exchange rates).

The challenges and lessons learned were reasonable and adequately addressed. The biggest single problem was a lost/delayed piece of luggage, which we addressed by assigning part of the team to locate the bag and part to replace the items. Success converged from both directions almost simultaneously after a delay of about one day. Milan is a major city but still posed challenges for finding replacement parts. A long-term lesson learned is to rely less on international transport of equipment; we are networking toward building a consortium of imaging projects in northern Italy that can share imaging equipment.

The Spectral RTI Toolkit works with ImageJ to process Spectral (narrowband) and RTI (hemisphere) captures into RTI and WebRTI files. Project activities cover five areas. First, the alpha-version of the Toolkit was published on GitHub (https://github.com/thanneken/SpectralRTI_Toolkit). Second, the Saint Louis University Center for Digital Humanities (http://lib.slu.edu/digital-humanities) was contracted to develop the toolkit from its current state as an ImageJ macro into a Java plugin for ImageJ2. The proposal describes this work as “Java Developer,” and Saint Louis University was selected for its past accomplishments in coding digital humanities projects, particularly those related to the shared interest in the International Image Interoperability Framework (IIIF). The contract covers the proposed activities (recode the plugin) within the budget, and assigns any excess effort to improving the WebRTI viewer to operate on a IIIF backend. Third, the Toolkit is being maintained and improved in light of the needs of users within and beyond the project. In particular, the project director supported Kathryn Piquette (University College London, Advanced Imaging Consultants http://www.ucl.ac.uk/dh/consulting/advanced-imaging-consultants) in implementing the Spectral RTI Toolkit on her independent projects using a different imaging system. The PhaseOne imaging system is the major alternative to the MegaVision system used by our team. Success in establishing shared compatibility was not surprising, but it is a valuable accomplishment nonetheless. Fourth, documentation has been published on the project website (http://jubilees.stmarytx.edu/spectralrtiguide/) and on GitHub (https://github.com/thanneken/SpectralRTI_Toolkit/tree/master/Guide) to facilitate derivatives and contributions from others. Fifth, training began with early adopters Kathryn Piquette and Sarah Baribeau.

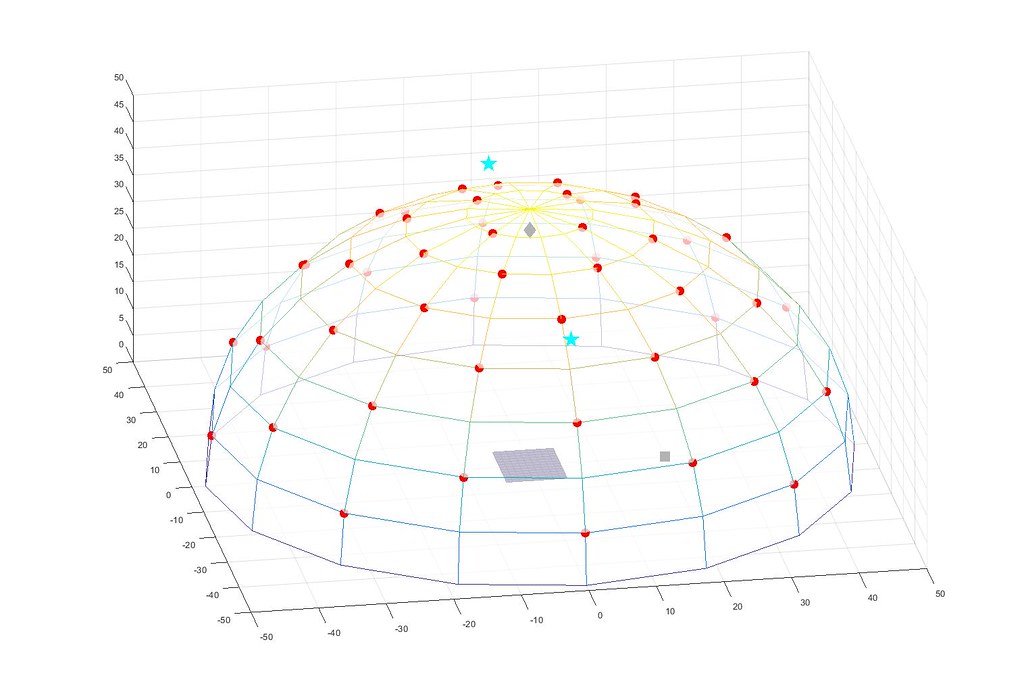

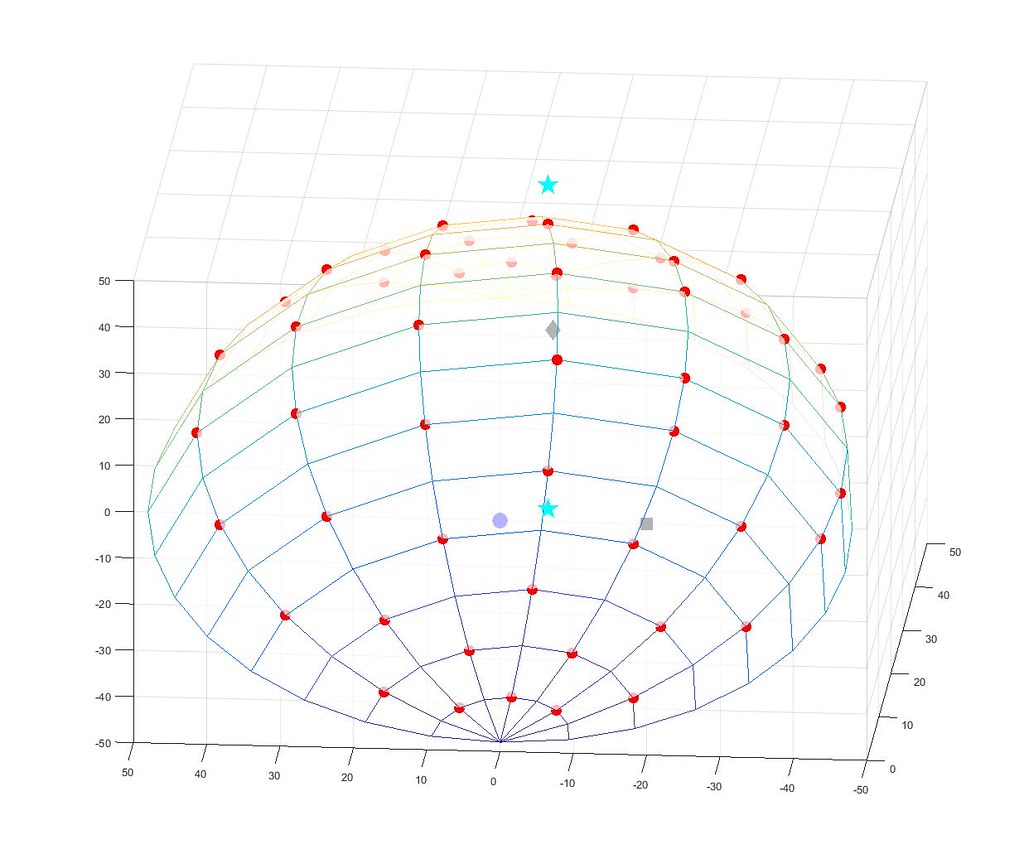

We worked hard and achieved success beyond the proposed activities in designing and building an arc to automate Spectral RTI captures. Capturing data for RTI images requires a real or virtual dome of discrete lights around the object. This can be done with a handheld flash, which requires manual positioning for each of fifty or so captures, and requires the light positions to be calculated from a reflective hemisphere for each page. It can also be done with a dome with lights fixed to known positions, but the diameter of the dome must be several times the diameter of the page, which is about eight feet for manuscripts. Such domes are unwieldy and interfere with safe object handling.

Through a series of conference calls and models (physical and Computer Aided Design, see appendix), our team designed an arc that has the major advantages of a dome but takes less space and can move out of the way. The arc pivots on the light stands already used by spectral imaging. The arc slots into seven positions that do not change from one sequence to the next. The arc holds sixteen lights, of which the odd and even numbered lights fire on alternating arc positions. The result is that fifty-six images capture the reflectance of the object when illuminated by evenly-distributed positions around a virtual hemisphere. The time required for hemisphere captures for RTI decreased from almost twenty minutes in the startup phase to less than five, while increasing the number of captures from thirty-five to fifty-six. The greater number of hemisphere captures increases texture resolution and decreases the impact of shots corrupted by shadows from the camera stand. The arc currently costs about $5000 to produce and should retail for about $8000. Several buyers are already pursuing orders so we can expect the cost to decrease with production optimizations. Comparative data for the cost of RTI domes is not available. The cost of a handheld flash could easily surpass $1000 for a quality flash with battery pack and radio trigger (an infrared trigger would corrupt spectral imaging captures). Even at $8000 the arc adds tremendous functionality for a fraction of the cost of a spectral imaging system (easily $100,000).
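The capture count described above can be checked with a short sketch. The seven positions, sixteen lights, and odd/even alternation come from the report. The pairing of odd lights with odd positions and the evenly spaced angles in `light_direction` are assumptions made only for illustration, not the measured geometry of the arc.

```python
import math

ARC_POSITIONS = 7   # from the report: the arc slots into seven positions
LIGHTS_ON_ARC = 16  # from the report: the arc holds sixteen lights


def firing_schedule(positions=ARC_POSITIONS, lights=LIGHTS_ON_ARC):
    """Return (arc_position, light_number) pairs for one hemisphere sequence.

    Assumption: odd-numbered lights fire on odd-numbered arc positions and
    even-numbered lights on even-numbered positions. The report says only
    that odd and even lights alternate between positions.
    """
    return [
        (pos, light)
        for pos in range(1, positions + 1)
        for light in range(1, lights + 1)
        if light % 2 == pos % 2
    ]


def light_direction(pos, light, positions=ARC_POSITIONS, lights=LIGHTS_ON_ARC):
    """Hypothetical unit vector from the object toward one light.

    Assumptions: the pivot axis is the x axis, the arc tilts in equal steps
    between the two horizons, and lights are equally spaced along the arc.
    """
    tilt = math.pi * pos / (positions + 1)    # tilt of the arc plane
    along = math.pi * light / (lights + 1)    # angle along the arc
    return (
        math.cos(along),
        math.sin(along) * math.cos(tilt),
        math.sin(along) * math.sin(tilt),
    )


schedule = firing_schedule()
print(len(schedule))  # eight lights at each of seven positions
```

Under these assumptions every direction has a positive vertical component, so the sequence samples a hemisphere above the page without any light moving between sequences.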

The team traveled to Milan and captured complete Spectral RTI data for all 144 pages of the Jubilees Palimpsest, plus early modern notes archived with the Jubilees Palimpsest and samples from five additional palimpsests in the Ambrosiana. The team consisted of the seven proposed participants and benefitted from additional volunteer effort from team members extending their time commitment and additional partners assisting at their own expense. The proposed team members were Todd Hanneken (project director), Anthony Selvanathan (graduate researcher from St. Mary’s University), Michael Phelps, Damianos Kasotakis, Roger Easton, Keith Knox, and Ken Boydston. Additional volunteers were Dale Stewart and Giulia Rossetto.

The travel to Milan originally scheduled for March 2017 was moved ahead to January 2017. This saved money and increased time availability of team members on site. We were able to rent three apartments in the same building, which worked very well. The favorable exchange rate helped us stay well within budget.

The narrowband spectral captures were increased to fifty-two captures per page in response to particular properties of the chemical reagent that was used early in the nineteenth century. The narrowband captures included fourteen bands of narrowband reflectance from ultraviolet to infrared, four bands of transmissive illumination, and a total of thirty-four fluorescence captures. The fluorescence captures included four different wavelengths of illumination and seven different filters plus additional variants at different exposure settings when the chemical reagent caused regions to differ radically in reflectance. The hemisphere captures for RTI amounted to fifty-six images per page. A total of 108 images per page were captured for all 144 pages of the Jubilees Palimpsest in just less than three of the four weeks in Milan.
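The per-page tally in this paragraph can be verified with simple arithmetic. All counts below are taken from the report; only the variable names are invented for the sketch.

```python
# Capture counts per page, as stated in the report.
spectral_captures = {
    "narrowband reflectance": 14,
    "transmissive illumination": 4,
    "fluorescence": 34,
}
rti_hemisphere_captures = 56
jubilees_pages = 144

spectral_per_page = sum(spectral_captures.values())
captures_per_page = spectral_per_page + rti_hemisphere_captures
jubilees_captures = captures_per_page * jubilees_pages

print(spectral_per_page, captures_per_page, jubilees_captures)
```

The sums come to 52 spectral captures and 108 captures per page, or 15,552 captures for the 144 pages of the Jubilees Palimpsest.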

With the remaining time we imaged the forty-six pages of non-palimpsest front matter and early modern notes archived with the Jubilees Palimpsest. Because these pages pose no challenges to legibility we used a reduced thoroughness (but still super archival quality) of sixteen images per page. We also sampled pages from other palimpsests in the Ambrosiana collection to aid demonstration of the utility of Spectral RTI and to probe the potential for future advanced imaging projects at the Ambrosiana. The objects selected were: an illumination from Petrarch’s Vergil that includes a crypto-script signature illegible to the human eye (A79 inf), an unidentified Greek commentary on the Gospel of Luke (F130sup), a palimpsest with several unidentified undertexts (H190inf), Origen of Alexandria’s six-column edition of versions of the book of Psalms (Hexapla, O39sup), and Wulfila’s fourth-century translation of the Epistles of Paul into Gothic, including a liturgical calendar (S36sup). The objects were selected to appeal to a broad range of scholarly, popular, and political constituencies.

In total we captured 239 pages, mostly at a rate of 108 captures per page, 50 megapixels per capture, 16 bits per pixel. Capture and on-site processing generated seven terabytes of data in Milan.
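As a rough plausibility check on the seven-terabyte figure, the sketch below estimates uncompressed storage from the numbers in the report. Several inputs are assumptions: that the 49 pages not otherwise accounted for were captured at 108 captures per page, that each of the three archived formats takes about the same space as the uncompressed pixel data, and that calibration captures and derived products are ignored.

```python
PIXELS_PER_CAPTURE = 50_000_000  # from the report: 50 megapixels
BITS_PER_PIXEL = 16              # from the report
ARCHIVED_FORMATS = 3             # raw, flattened, gamma-corrected

TOTAL_PAGES = 239
# (description, pages, captures per page)
page_groups = [
    ("Jubilees Palimpsest", 144, 108),
    ("front matter and early modern notes", 46, 16),
    # Assumption: all remaining pages were captured at the full rate.
    ("samples from other palimpsests", TOTAL_PAGES - 144 - 46, 108),
]

bytes_per_capture = PIXELS_PER_CAPTURE * BITS_PER_PIXEL // 8
total_captures = sum(pages * rate for _, pages, rate in page_groups)
estimated_bytes = total_captures * bytes_per_capture * ARCHIVED_FORMATS

print(total_captures, estimated_bytes / 1e12)
```

Under these assumptions the estimate is 21,580 captures and roughly 6.5 TB, the same order as the seven terabytes reported.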

The data generated was archived for accessibility, functionality, and clarity for the immediate team and for posterity. For each capture, three formats are archived. First, the raw data from the camera in digital negative (dng) format was immediately set to read-only and archived for posterity should any of our subsequent processing decisions be questioned. Second, the data was “flattened” (corrected for aberrations in lighting based on a plain white calibration target). This data is most useful to the scientists for processing. Third, the flattened data was gamma-corrected to match the perception bias of the human eye. These gamma-corrected images are necessary for processing designed for human consumption. This data is somewhat redundant in that the latter two formats could be rederived from the first. We are considering ways to reduce this redundancy without sacrificing accessibility. The question is how easily, consistently, and reliably posterity will be able to rederive the derived data. In the meantime, all three are considered archival, along with the calibration captures.
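The relationship between the three archived formats can be illustrated with a minimal sketch. It assumes that flattening is a per-pixel division by the white calibration capture and that gamma correction uses an exponent of 1/2.2. The report states neither detail, so both are illustrative choices, and images are represented as plain nested lists of values between 0 and 1.

```python
def flatten(raw, white, eps=1e-6):
    """Divide each raw pixel by the white-target pixel at the same location.

    Assumption: a simple ratio; the project's actual flattening may differ.
    """
    return [
        [r / max(w, eps) for r, w in zip(raw_row, white_row)]
        for raw_row, white_row in zip(raw, white)
    ]


def gamma_correct(linear, gamma=2.2):
    """Apply a power-law curve to linear values clipped to the range 0..1.

    Assumption: gamma of 2.2, chosen here only for illustration.
    """
    return [
        [min(max(v, 0.0), 1.0) ** (1.0 / gamma) for v in row]
        for row in linear
    ]


# Synthetic example: uneven lighting across a uniformly gray page.
white = [[0.4, 0.8], [0.2, 0.9]]
raw = [[0.2, 0.4], [0.1, 0.45]]

flat = flatten(raw, white)
display = gamma_correct(flat)
```

In this synthetic example the uneven lighting disappears after flattening, and the gamma-corrected values are brighter than the linear ones, which is the sense in which later formats are derivable from earlier ones given the calibration capture.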

Extensive capture metadata is encoded into the EXIF headers of the captured images. We supplement this metadata with an XML file for each page that includes all the EXIF metadata for each image in the sequence, while grouping together data that is constant for all shots in the session or image sequence. Additionally, illuminator sequence codes meaningful to the team may not be meaningful to posterity so they are elaborated in companion tags using a namespace specific to spectral imaging.
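The grouping of constant metadata can be sketched as follows. The element names (`page`, `session`, `image`, `tag`) and the sample EXIF keys are hypothetical; the report does not describe the actual schema, and the companion tags for illuminator codes are not modeled here.

```python
import xml.etree.ElementTree as ET


def build_page_metadata(page_id, exif_per_image):
    """Build one XML tree per page from {filename: {exif_key: value}}.

    Keys whose value is identical for every image are written once under
    <session>; all other keys are written under each <image>.
    Element and attribute names are hypothetical.
    """
    records = list(exif_per_image.values())
    shared_keys = set.intersection(*(set(r) for r in records)) if records else set()
    constant = {k for k in shared_keys if len({r[k] for r in records}) == 1}

    root = ET.Element("page", id=page_id)
    session = ET.SubElement(root, "session")
    for key in sorted(constant):
        ET.SubElement(session, "tag", name=key).text = str(records[0][key])

    for filename in sorted(exif_per_image):
        image = ET.SubElement(root, "image", file=filename)
        for key in sorted(exif_per_image[filename]):
            if key not in constant:
                value = exif_per_image[filename][key]
                ET.SubElement(image, "tag", name=key).text = str(value)
    return root


# Synthetic example values, not taken from the project data.
example = {
    "capture_001.dng": {"LensModel": "example lens", "ExposureTime": "1/2"},
    "capture_002.dng": {"LensModel": "example lens", "ExposureTime": "1/4"},
}
print(ET.tostring(build_page_metadata("page-001", example), encoding="unicode"))
```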

Data preservation and integrity were ensured at several levels. First (and most often overlooked) we countered the threat of “bit rot” by using the B-Tree File System (BTRFS), which provides checksums at the file system level and redundant file system metadata. Checksums are also used in verifications and duplications using rsync. Second, we countered the threat of drive failure by using RAID 1 or 10 redundancy in the definitive archives and backups. Third, we countered the threat of losing an entire computer or piece of luggage by distributing backups across locations. Several cities could be destroyed in the next world war and our data would survive.
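The idea behind checksum verification can be shown at the application level. This sketch is not what BTRFS or rsync does internally; it is a minimal illustration using SHA-256 from the Python standard library, with function names invented for the example.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large captures need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file's path (relative to root) to its checksum."""
    root = Path(root)
    return {
        str(p.relative_to(root)): sha256_of(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def changed_files(root, manifest):
    """Return files from the manifest that are now missing or altered."""
    current = build_manifest(root)
    return sorted(name for name in manifest if current.get(name) != manifest[name])
```

A manifest recorded when the archive is created can later be compared against any backup copy; a non-empty result from `changed_files` signals silent corruption or an incomplete copy.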

The archival data is publicly available for specialists apart from the IIIF image repository described below, which serves a much wider audience. The data archive is available at http://palimpsest.stmarytx.edu/AmbrosianaArchive. Like all grant products, the data is accessible without any kind of encumbrance (e.g., account creation, cookie stalking) under a Creative Commons license (CC BY-SA for everything created solely by the Jubilees Palimpsest Project and CC BY-NC-SA for objects owned by the Biblioteca Ambrosiana).

Data processing can be grouped into two end goals. The first is to create a digital facsimile that captures the present state of the artifact as accurately as possible. This kind of accuracy is useful to students and scholars who do not have first-hand access to the artifact, and to future conservators and scholars who will not otherwise have precise information on the state of the artifact in 2017. Accurate digitization of first-hand experience is done with high-resolution color using ten wavelengths within the visible spectrum. From this data accurate color images were created in the LAB (preferable for archival quality) and sRGB (preferable for compatibility and accessibility) color spaces. These derivative files have 24-bit color depth. Accurate spatial resolution is achieved by avoiding Bayer or other filters, and by using an apochromatic lens. Accuracy in texture is achieved by using transmissive light (as if holding the page up to a light) and capturing reflectance of light originating from different angles (raking light images and eventually RTI, as if moving a light around the object).

The second major end goal is to surpass first-hand experience for reading illegible text, marginalia, and other features. Some of these follow standard recipes and some involve case-by-case labor. The standard recipe included with the Spectral RTI Toolkit is Extended Spectrum, which essentially squeezes ultraviolet and infrared into the visible spectrum and optimizes contrast. Another standard recipe was created by imaging scientist Keith Knox to deal with the particular problems of the reagent-saturated palimpsest. This method, called RuBY, takes its name from the formula of taking Royal blue fluorescence divided BY transmissive. It has proven effective at reading illegible text in the palimpsest. Two additional recipes developed by Knox, Sharpie and Pseudocolor, were applied to the palimpsest samples other than C73inf. All of the processes described thus far (Accurate Color, Extended Spectrum, Ruby, Sharpie, and Pseudocolor with raking and transmissive light variants and WebRTI) have been completed and published for all pages captured. Additional supervised processing has been completed for the supplemental palimpsests except S36sup. One technique, called PCA Pseudocolor, is built into the Spectral RTI Toolkit. Although this processing can be done by anyone with default settings, we are taking our time to optimize quality. The most advanced technique requires a feedback loop between scholars and scientists. The chief scholar Todd Hanneken and the scientists Keith Knox and Roger Easton are conducting weekly conference calls to discuss processing recipes and focused efforts. The processing guides created through this collaboration are archived, publicly accessible, and discoverable through search engines: http://palimpsest.stmarytx.edu/AmbrosianaArchive/Guides/. A related document studies the paleography of the Jubilees Palimpsest by grouping together legible examples of each letter: http://palimpsest.stmarytx.edu/AmbrosianaArchive/Guides/LatinMosesPaleography.html.
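The ratio behind the Ruby recipe can be sketched in a few lines. Only the formula (royal blue fluorescence divided by transmissive) comes from the report. The guard against division by zero and the contrast stretch to the range 0..1 are assumptions, and Knox's actual recipe may include registration or other steps not shown here.

```python
def ruby_ratio(royal_blue_fluorescence, transmissive, eps=1e-6):
    """Divide the fluorescence image by the transmissive image, pixel by pixel.

    Images are nested lists of equal shape. The result is stretched linearly
    to 0..1 for display (an assumption, not part of the stated formula).
    """
    ratio = [
        [f / max(t, eps) for f, t in zip(f_row, t_row)]
        for f_row, t_row in zip(royal_blue_fluorescence, transmissive)
    ]
    lowest = min(min(row) for row in ratio)
    highest = max(max(row) for row in ratio)
    span = (highest - lowest) or 1.0  # avoid dividing by zero on flat images
    return [[(v - lowest) / span for v in row] for row in ratio]


# Synthetic 2x2 example values, not project data.
fluorescence = [[0.30, 0.10], [0.20, 0.40]]
transmitted = [[0.60, 0.50], [0.40, 0.40]]
enhanced = ruby_ratio(fluorescence, transmitted)
```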

Together with the Department of Network Services at St. Mary’s University, the project director created a IIIF image repository on an Amazon Web Services EC2 instance with EFS primary storage, Amazon S3 backup storage, Amazon CloudFront international caching, and Domain Name Service for http://jubilees.stmarytx.edu. As described in the proposal, this arrangement is ideal for the predominantly off-campus traffic of the project and the potential need for elasticity if usage spikes with media coverage. Lessons learned include the inadequacy of Amazon S3 for primary storage of Jpeg2000 files because the need to transfer the whole file before reading any of it defeats the advantage of Jpeg2000 that only the region and resolution required need be read and processed. Because CloudFront caches web pages for twenty-four hours it is essential to double-check all data before uploading it to the Amazon EC2 instance.
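The advantage described above, that only the required region and resolution need be read, is visible in how a IIIF Image API request is written. The sketch below builds request URLs following the version 2 URL pattern of identifier, region, size, rotation, quality, and format. The base URL and identifier are hypothetical, and the report does not state which version of the API the repository implements.

```python
from urllib.parse import quote


def region_px(x, y, width, height):
    """Region parameter in pixels: the rectangle the server should read."""
    return f"{x},{y},{width},{height}"


def iiif_image_url(base, identifier, region="full", size="full",
                   rotation=0, quality="default", fmt="jpg"):
    """Assemble a IIIF Image API (version 2 pattern) request URL.

    The identifier is percent-encoded, since it may contain slashes or spaces.
    """
    encoded = quote(identifier, safe="")
    return f"{base.rstrip('/')}/{encoded}/{region}/{size}/{rotation}/{quality}.{fmt}"


# Hypothetical server and identifier, for illustration only.
url = iiif_image_url(
    "https://example.org/iiif/",
    "page 001.jp2",
    region=region_px(1024, 2048, 512, 512),
    size="256,",  # scale the region to 256 pixels wide
)
print(url)
```

A viewer zooming into one corner of a page issues many small requests like this one. With a JPEG 2000 backend on storage that supports partial reads, each request touches only the tiles it needs.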

The project director tested open source alternatives for the Jpeg 2000 backend of the IIP image server. Unfortunately, quality, performance and reliability were acceptable only with the commercial alternative (Kakadu), which is the one thorn in the side of an otherwise entirely open-source project. Once the IIP image server was compiled with the Kakadu Jpeg 2000 libraries and the Apache configuration adjusted, the IIIF Image API compliance was ready.

IIIF Presentation API manifests were written with placeholder data in advance of the capture session and filled in as data was created. This allowed many images to go live before the capture session was complete. One challenge with the IIIF Presentation API is that even Mirador, which was specifically designed for IIIF manifests, does not fully support the standard. The short-term solution was to create a new IIIF Navigator using Leaflet and JQuery (http://jubilees.stmarytx.edu/iiifp/). A more sustainable approach is to work with a branch of Mirador created by UCLA Libraries (http://jubilees.stmarytx.edu/uclamirador/). With some adjustment and mild compromises in the manifests, this approach offers a more sustainable path to future development.
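The placeholder-then-fill workflow can be sketched as below. It assumes the structure of IIIF Presentation API version 2 (manifest, sequence, canvas, painting annotation). The report does not state the version used or how the project's placeholders were written, and all URLs, labels, and function names are hypothetical.

```python
import json


def placeholder_manifest(manifest_id, label, page_labels):
    """Create a manifest whose canvases exist but have no images yet."""
    canvases = [
        {
            "@id": f"{manifest_id}/canvas/{number}",
            "@type": "sc:Canvas",
            "label": page,
            "height": 1,  # placeholder dimensions until a capture exists
            "width": 1,
            "images": [],
        }
        for number, page in enumerate(page_labels, start=1)
    ]
    return {
        "@context": "http://iiif.io/api/presentation/2/context.json",
        "@id": manifest_id,
        "@type": "sc:Manifest",
        "label": label,
        "sequences": [{"@type": "sc:Sequence", "canvases": canvases}],
    }


def attach_image(manifest, canvas_index, image_service_id, width, height):
    """Fill one canvas with an image once its data has been published."""
    canvas = manifest["sequences"][0]["canvases"][canvas_index]
    canvas["width"], canvas["height"] = width, height
    canvas["images"] = [{
        "@type": "oa:Annotation",
        "motivation": "sc:painting",
        "on": canvas["@id"],
        "resource": {
            "@id": f"{image_service_id}/full/full/0/default.jpg",
            "@type": "dctypes:Image",
            "format": "image/jpeg",
            "width": width,
            "height": height,
            "service": {
                "@context": "http://iiif.io/api/image/2/context.json",
                "@id": image_service_id,
                "profile": "http://iiif.io/api/image/2/level1.json",
            },
        },
    }]


# Hypothetical identifiers and dimensions, for illustration only.
manifest = placeholder_manifest(
    "https://example.org/manifests/sample", "Sample manuscript", ["1r", "1v"]
)
attach_image(manifest, 0, "https://example.org/iiif/sample-1r", 8000, 6000)
print(json.dumps(manifest, indent=2)[:200])
```

Because the canvas identifiers are fixed in advance, viewers and links that reference a page remain valid while images are attached one at a time.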

The reason that the project data pushes the limits of Mirador is that many resources describe each page. For the Jubilees Palimpsest we have old microfilm, an 1861 edition as a scanned image and as a TEI XML transcription, a new project transcription, existing translations, and a new project translation. We also have WebRTI in several color processes, and the spectral image cube with various color enhancements, raking light positions, and transmissive illumination. This goes far beyond the typical model of a “page turner” use of Mirador in which each page is one and only one image.

XML transcriptions and translations were created for Latin Moses. TEI compliance and additional transcriptions were assigned to a graduate researcher.

Activities to support media coverage surged after the NEH announcement in August 2016. Coverage is listed below under accomplishments.

The audiences served can be grouped into three categories: 1) scholars of the ancient literature being recovered; 2) digital humanists interested in the capture and processing technology, or similarly the publication technology; and 3) general interest and popular media.

Scholarly interest has come through the team members and from presence on the Internet leading individuals to contact the project director. The team members are all well established in their respective specializations, so word has spread quickly. The November meeting of the Society of Biblical Literature in particular was an excellent opportunity to identify and begin collaboration with scholars specializing in the various texts imaged. Other scholars have come to us based on searching the Internet or reading our publications. Working at the Ambrosiana for a month was a great opportunity to network in the field and demonstrate our capabilities.

The first print publication of an image we captured appeared on the cover of Alexey Eliyahu Yuditsky, A Grammar of the Hebrew of Origen's Transcriptions. Israel: The Academy of the Hebrew Language (2017).

Similarly, word about our technologies for capture, processing, and publication spread through professional networks and the Internet. For example, Gregory Heyworth of the Lazarus Project also does spectral imaging with MegaVision, and was one of the first to learn about the new Spectral RTI arc and software to process the data captured therewith. Giulia Rossetto is an imaging specialist who worked on the Sinai Palimpsests Project and donated some of her time to assist our project in Milan. Kathryn Piquette is an RTI specialist expanding into spectral using the PhaseOne system, and has begun using our software with her own captures. The project director demonstrated the project and the IIIF Navigator interface (designed to accommodate the large number of resources per page) to the monthly community video conference of the Manuscript Group of the IIIF community. He also presented on texture imaging at the first annual conference of Rochester Cultural Heritage Imaging, Visualization, and Education (R-CHIVE).

There has been significant popular media interest in the project. See especially the publications noted above.

We measure our success by our ability to answer the following questions.

Yes. Beyond the limited tests from the startup phase we demonstrated that the technique is feasible in the capture phase and effective in the processing and publication phase. The equipment problems we did encounter had nothing to do with the addition of RTI. We were able to conduct the capture at a steady pace consistent with other spectral imaging projects. The technology works efficiently and consistently combines the advantages of spectral imaging with the advantages of RTI.

Yes. By using the MegaVision RTI arc we can conduct Spectral RTI in less time than it takes to do RTI alone using the hand-held flash method. The RTI sequence of 56 captures takes 4-5 minutes. The sustained rate of capture (including object mounting, 52 spectral captures, 56 RTI captures, breaks, occasional trouble shooting, visitor interruptions) averages 20 minutes per page. The arc also saves about five to ten minutes of processing time compared to determining light positions of a handheld flash from a reflective hemisphere.

Yes. This is not a major goal until the third project year, but we were already able to work with Kathryn Piquette to capture and process images from Spectral RTI. The fact that she is working in the UK on a different system (PhaseOne rather than MegaVision) is a good start to expanding our reach. The technology has also been adopted by Gregory Heyworth of the Lazarus Project at the University of Rochester and Sarah Baribeau of EMEL. Our goal is the opposite of forming a monopoly on the technology.

This is a long-term goal. Right now it works. We really need some online tutorials and some interface polish. We plan to measure how many people we reach through the non-specialist interfaces using software designed to analyze Apache web server logs. The logs are being archived but have not yet been processed. We are not tracking users through cookies. Google Analytics is used only for the main page.
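A minimal sketch of the planned log analysis follows. It assumes the Apache common or combined log format and counts distinct client addresses that successfully requested paths under a given prefix. The function name and sample lines are invented for illustration and do not represent the software the project will use.

```python
import re

# Matches the start of a common/combined log line:
# host, identity, user, [time], "METHOD path protocol", status
LOG_LINE = re.compile(
    r'^(\S+) \S+ \S+ \[[^\]]+\] "\S+ (\S+)[^"]*" (\d{3}) '
)


def unique_visitors(lines, path_prefix):
    """Count distinct hosts with a 2xx or 3xx response under path_prefix."""
    hosts = set()
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines that do not follow the expected format
        host, path, status = match.groups()
        if path.startswith(path_prefix) and status[0] in "23":
            hosts.add(host)
    return len(hosts)


# Synthetic sample lines using documentation-reserved addresses.
sample = [
    '203.0.113.5 - - [30/Sep/2017:10:00:00 -0500] "GET /iiifp/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '203.0.113.5 - - [30/Sep/2017:10:00:09 -0500] "GET /iiifp/app.js HTTP/1.1" 304 0 "-" "Mozilla/5.0"',
    '198.51.100.7 - - [30/Sep/2017:10:05:00 -0500] "GET /iiifp/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '198.51.100.9 - - [30/Sep/2017:10:06:00 -0500] "GET /iiifp/missing HTTP/1.1" 404 210 "-" "Mozilla/5.0"',
    '192.0.2.1 - - [30/Sep/2017:10:07:00 -0500] "GET /spectralrtiguide/ HTTP/1.1" 200 900 "-" "Mozilla/5.0"',
]
print(unique_visitors(sample, "/iiifp/"))
```

Counting distinct addresses is only a proxy for people reached, since caching through CloudFront and shared addresses both distort it, but it requires no cookies or user tracking.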

This is the most interesting question to the image processing scientists. Progress on this test case would have broad application. So far we have decent progress with a new technique called Ruby (Royal blUe fluorescence divided BY transmissive). We also have an understanding of why it is so difficult (because the undertext, overtext, and reagent are all made of the same iron gall ingredients). We do not yet have a slam-dunk universal solution.

This is the most interesting question to the scholars of Jubilees and the Testament of Moses. After a very short time, it seems we can add at least a few words to what Ceriani could read in 1861. Whether this will have a major impact on our understanding of the original composition remains to be seen. Perhaps more intriguing is our ability to correct Ceriani’s reading. At that time editors took liberties with reconstruction and claiming to see what they expected to see. As we accumulate evidence that Ceriani was a “loose” editor every reading he proposed comes into question and will be subject to additional scrutiny. Work here has barely begun. We started working on the regions Ceriani did not claim to read and only accidentally noticed that some of the surrounding text does not fit his reading or clearly reads otherwise.

At this early stage I can assert that I think the repository and interface are a great teaching tool. Our graduate researcher was certainly awestruck when we let him interact with the first enhanced images. Additional evidence will be gathered here in future reports.

We have all the data we need to proceed with the rest of the project periods. We can demonstrate the utility of the technology to scholars and prepare the technology for widespread adoption.

Additionally, we are building a strong case for returning to the Ambrosiana to image more palimpsests. The Ambrosiana seems to be comfortable collaborating with U.S. institutions (Notre Dame and the Jubilees Palimpsest Project) as the digital library side of their mission while they continue their non-digital work. We are also building a network of contacts and a case for establishing a consortium of imaging projects in northern Italy. This would save much money on transport and troubleshooting of equipment while allowing individual teams to pursue a diversity of approaches with different collections.

We seem poised to make long-term impacts on scholars of ancient literature and on image capture, processing, and publication teams. Evidence will be added here as it becomes available.

The project website works and includes basic processing of all pages. Advanced supervised processing has a strong start and is ongoing. IIIF Presentation manifests have been created for all the palimpsests imaged.

The ImageJ macro edition of the SpectralRTI_Toolkit and its documentation are now available. The Toolkit will benefit from development in performance and user interface thanks to the contracted work with the Saint Louis University Center for Digital Humanities and future training and feedback sessions.

See above.

The design with two low pivots was built:

The “carousel” design could also be useful in permanent facilities with adequate space and overhead structure.